Technology changed dramatically between 1950 and 2024. This period saw the rise of computers, the internet, mobile technology, and artificial intelligence, which together reshaped nearly every aspect of daily life. This article walks through the major technological advances of each decade and how they affected society, the economy, and culture.

The 1950s: The Dawn of the Computer Age

Computing Beginnings

The 1950s marked the start of the digital era with the introduction of the first commercial computers. One of the most notable was the UNIVAC I (Universal Automatic Computer), developed by J. Presper Eckert and John Mauchly. First delivered in 1951, it was the first general-purpose computer produced commercially in the United States, and it was used mainly for business data processing and scientific calculations.

Early Programming Languages

This era also saw the birth of high-level programming languages. In 1957, IBM introduced Fortran (short for Formula Translation), designed for scientific and engineering calculations. Fortran let programmers write formulas in a notation close to mathematics instead of machine-specific instructions, which made complex software much easier to write.

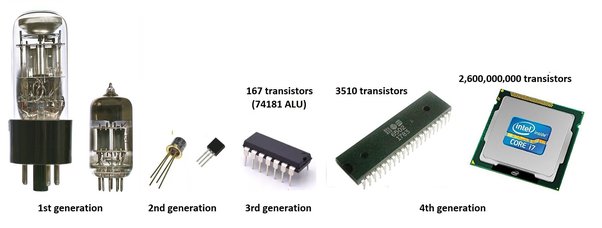

Transistors and Early Innovations

The transistor, invented in 1947 by John Bardeen, Walter Brattain, and William Shockley at Bell Labs, transformed electronics. During the 1950s, transistors began to replace vacuum tubes in computers, making the machines more reliable, compact, and energy-efficient.

The 1960s: The Beginning of Space Exploration and Early Computer Networks

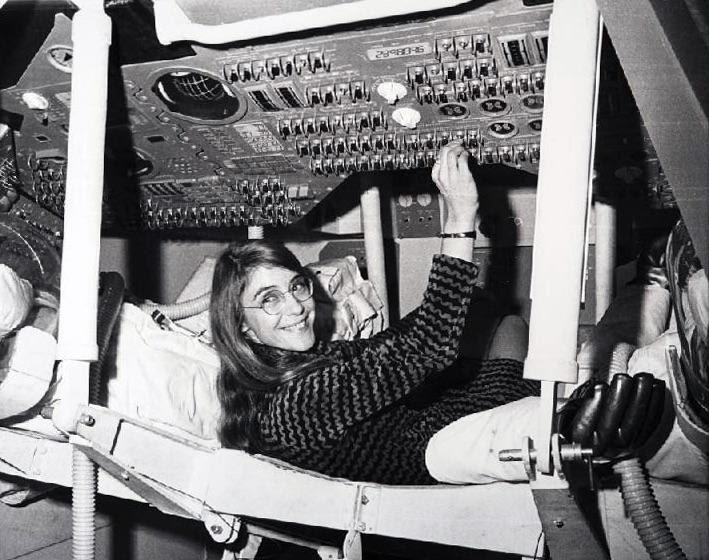

Space Race and Computers

The 1960s were marked by the space race, which drove the development of many new technologies. NASA’s Apollo missions, including the Apollo 11 moon landing in 1969, depended on computers both on the ground and on board the spacecraft. These systems were among the most sophisticated of their time and showed how essential computers were becoming for managing complex tasks.

Early Computer Networks

The idea of computer networks started to develop in the 1960s. In 1969, ARPANET (Advanced Research Projects Agency Network) went online, funded by the U.S. Department of Defense. By the end of that year it connected four sites: UCLA, the Stanford Research Institute, UC Santa Barbara, and the University of Utah. ARPANET was a forerunner of what we now call the internet and one of the first networks to use packet switching, a method that breaks data into small, individually routed packets and is still used today.
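
To make the idea of packet switching concrete, here is a minimal sketch in Python. It is an illustration only, not how ARPANET was implemented: the `Packet` class and the `packetize` and `reassemble` functions are hypothetical names, and the random shuffle stands in for packets taking different routes through a network.

```python
import random
from dataclasses import dataclass


@dataclass
class Packet:
    seq: int        # position of this chunk in the original message
    total: int      # how many packets make up the whole message
    payload: bytes  # the chunk of data itself


def packetize(message: bytes, size: int) -> list[Packet]:
    """Split a message into fixed-size, individually numbered packets."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def reassemble(packets: list[Packet]) -> bytes:
    """Rebuild the message from packets that may arrive in any order."""
    ordered = sorted(packets, key=lambda p: p.seq)
    if not ordered or len(ordered) != ordered[0].total:
        raise ValueError("missing packets")
    return b"".join(p.payload for p in ordered)


message = b"LOGIN request sent across the network"
packets = packetize(message, size=8)
random.shuffle(packets)  # simulate packets arriving out of order
restored = reassemble(packets)
print(restored == message)
```

Because every packet carries its own sequence number, the receiver can rebuild the message no matter which order the pieces arrive in. Real protocols add addressing, error checking, and retransmission of lost packets, all of which this sketch leaves out.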

Miniaturization and Integrated Circuits

The 1960s also saw the spread of integrated circuits (ICs), which Jack Kilby and Robert Noyce had invented independently in 1958 and 1959. ICs combined many tiny transistors onto a single chip, making electronic devices smaller and cheaper. This breakthrough led to more compact and affordable computers.

The 1970s: The Rise of Personal Computing and the Microprocessor

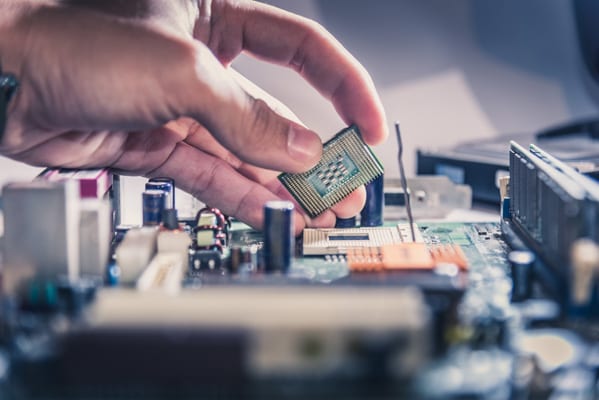

Microprocessor Revolution

In the 1970s, the microprocessor changed computing forever. In 1971, Intel launched the 4004, the first commercially available microprocessor. This small chip placed a computer’s entire central processing unit (CPU) on a single component, paving the way for computers that were affordable and accessible for both homes and businesses.

Personal Computers Enter the Market

The personal computer (PC) era kicked off in the 1970s with the release of computers like the Altair 8800 in 1975 and the Apple II in 1977. The Altair 8800, made by MITS, is often seen as the start of the personal computer revolution. The Apple II, designed primarily by Steve Wozniak at Apple, the company he co-founded with Steve Jobs, was one of the first successful mass-produced PCs. It was known for its color graphics and an open design with expansion slots that encouraged other companies to build add-ons.

Software and Operating Systems

During this decade, software for personal computers began to emerge. Microsoft’s first product was a version of the BASIC programming language for the Altair 8800. In 1979, VisiCalc was introduced as the first spreadsheet program for personal computers, and it transformed business and financial calculations.

The 1980s: The Era of Networking and the Rise of the Internet

Growth of Personal Computing

The 1980s were a period of rapid growth for personal computers. In 1981, IBM introduced its first PC, which became the de facto standard for personal computers. Its open design meant other companies could make compatible computers and accessories, which lowered prices and put personal computers in many more homes and offices.

Introduction of GUI and User-Friendly Interfaces

During the 1980s, computers became easier to use thanks to graphical user interfaces (GUIs). In 1984, Apple launched the Macintosh, the first commercially successful PC with a GUI, which users navigated with a mouse. Microsoft followed in 1985 with Windows 1.0, a graphical environment whose later versions became very popular.

Networking and the Birth of the Internet

The 1980s also saw advances in networking technology. In 1983, ARPANET adopted the TCP/IP protocol suite, which is still the basis of the internet today. The Domain Name System (DNS), specified in late 1983, began to be deployed in 1984. It maps human-readable names to numeric network addresses, making it simpler to find different computers on the network.
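
The core job of DNS, turning a name into a numeric address, can be sketched as a simple lookup. This is a toy illustration under stated assumptions: the `HOSTS` table and the `resolve` function are hypothetical, the addresses come from a range reserved for documentation, and the real DNS is a distributed, hierarchical system rather than a single table.

```python
# Hypothetical name-to-address table. The addresses are taken from
# 192.0.2.0/24, a range reserved for documentation and examples.
HOSTS = {
    "example.edu": "192.0.2.10",
    "mail.example.edu": "192.0.2.25",
}


def resolve(name: str) -> str:
    """Return the address for a host name, ignoring case and a trailing dot."""
    key = name.strip().rstrip(".").lower()
    try:
        return HOSTS[key]
    except KeyError:
        raise LookupError(f"no address known for {name!r}") from None


print(resolve("Mail.Example.EDU"))
```

The point of the sketch is the indirection: people remember the name, while the network uses the number, and the mapping between them can change without anyone having to learn a new name.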

The Rise of the World Wide Web

In 1989, Tim Berners-Lee, working at CERN, proposed the World Wide Web, a system of linked documents that runs on top of the internet. By 1991, the first website was up and running, starting the web’s journey as a place for public information.

The 1990s: The Internet Boom and Digital Revolution

Explosion of Internet Usage

In the 1990s, the internet entered the mainstream. Web browsers like Mosaic (1993) and Netscape Navigator (1994) made the web much easier to use. By the mid-1990s, the internet was opening up to businesses and online shopping, leading to a surge in internet use.

Dot-Com Boom and Bust

Toward the end of the 1990s, excitement and speculation about internet companies fueled what became known as the dot-com boom. Many new startups grew quickly and reached very high valuations. In 2000 the bubble burst, causing many of these companies to fail and their investors to lose money. Even so, the internet continued to develop and set the stage for future innovations.

Advancements in Mobile Technology

The 1990s also brought big changes in mobile technology. The first SMS (text message) was sent in 1992, and mobile phones started to become more common. Companies like Nokia and Motorola introduced more advanced phones, paving the way for the smartphone era.

The 2000s: The Rise of Smartphones and Social Media

The Rise of Smartphones

In the 2000s, smartphones changed how people use technology. In 2007, Apple launched the iPhone, which combined a phone, an iPod, and an internet device in one. Its touchscreen, together with the App Store that followed in 2008, set new standards for mobile phones.

Social Media Takes Off

During this decade, social media grew rapidly. Platforms like Facebook (2004), YouTube (2005), and Twitter (2006) became part of everyday life for many people. They changed the way we communicate, share content, and interact with each other and with businesses.

Better Internet Connections

Broadband and Wi-Fi technology became widely available in the 2000s, making internet connections faster and more reliable. This improvement helped the growth of streaming services, online gaming, and cloud computing, changing how we access and use digital content.

The 2010s: Big Data, Artificial Intelligence, and the Internet of Things

Big Data and Cloud Computing

The 2010s saw the rise of big data and cloud computing. The ability to collect, analyze, and leverage vast amounts of data became a significant advantage for businesses. Cloud computing platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud provided scalable and flexible computing resources, enabling companies to store and process data more efficiently.

Advancements in Artificial Intelligence

Artificial Intelligence (AI) began to make significant strides in the 2010s. Machine learning algorithms and deep learning techniques enabled computers to perform tasks such as image recognition, natural language processing, and autonomous driving. Notable advancements included IBM’s Watson, which won “Jeopardy!” in 2011, and the development of AI-powered virtual assistants like Siri, Alexa, and Google Assistant.

The Internet of Things (IoT)

The concept of the Internet of Things (IoT) gained traction, with devices and objects becoming interconnected through the internet. Smart home technologies, wearable devices, and connected appliances became increasingly prevalent, allowing users to monitor and control their environments remotely.

Blockchain and Cryptocurrencies

Blockchain technology gained wide attention in the 2010s. Introduced with Bitcoin, which launched in 2009, a blockchain records transactions in a chain of blocks, each linked to the one before it by a cryptographic hash, so that the record is decentralized and hard to alter. The technology has since been applied in fields including supply chain management, finance, and digital identity.
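
The tamper resistance of a blockchain comes from each block storing a hash of the block before it. The sketch below shows only that one idea, assuming a simplified block layout. The functions `block_hash`, `add_block`, and `is_valid` are hypothetical names, and the example leaves out everything that makes a real blockchain decentralized, such as networking, consensus, and digital signatures.

```python
import hashlib
import json


def block_hash(index, prev_hash, transactions):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "transactions": transactions},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, transactions):
    """Append a block that is linked to the current last block."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "prev_hash": prev_hash,
        "transactions": transactions,
        "hash": block_hash(index, prev_hash, transactions),
    })


def is_valid(chain):
    """Check every block's own hash and its link to the previous block."""
    prev_hash = "0" * 64
    for index, block in enumerate(chain):
        if block["index"] != index or block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["prev_hash"], block["transactions"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


chain = []
add_block(chain, ["alice pays bob 5"])
add_block(chain, ["bob pays carol 2"])
print(is_valid(chain))  # True

chain[0]["transactions"] = ["alice pays bob 500"]  # tamper with history
print(is_valid(chain))  # False
```

Changing an old transaction changes that block's hash, which no longer matches the stored value, so the tampering is detected. In a real network, many independent participants hold copies of the chain and run checks like this one.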